When you do video production of any kind, you quickly run into two problems: keeping the files organized so that you can actually find them, and managing the insane volumes of data. I sort of knew that movie studios had massive data volumes, but I didn’t realize that even a moderate operation like ours would produce so much data.

Category: Security

Whose side are they on?

This is an interview in Wired with the principal deputy director of national intelligence (of the US). It’s all about how the tech industry should work more closely with the (US) government. It starts out in classic paranoid fashion:

“SUE GORDON, THE principal deputy director of national intelligence, wakes up every day at 3 am, jumps on a Peloton, and reads up on all the ways the world is trying to destroy the United States.”

…and goes on to:

“I think there’s a lot of misconception about those of us who work in national security and intelligence,” she says. “We swear to uphold and defend the Constitution of the United States. That means we believe in and swear to uphold privacy and civil liberties.”

Not once does the text mention that “the” government is actually just one government. Not once does Gordon or the reporter reflect on the fact that working closely with the (US) government means taking sides against the rest of the world. There is less and less common interest between the US government and the rest of the western world, not to mention the non-western world, so any initiative the tech companies join in with the US government is a big red flag for the market outside the US. Think Huawei.

I’m pretty certain the major tech companies do realize that the majority of their customers are not US patriots, and that being too cozy with the US intelligence services may not be good for business. Increasingly so.

I’m amazed, though, that this wasn’t even considered when interviewing for and writing that article. Maybe it would be a good idea not to distribute arguments like this beyond the US. Why do they even let us read “patriotic” claptrap like this? I can’t imagine the tech companies liking it much.

Retrospect setup, tips and tricks

While setting up Retrospect, I ran into a number of problems, most of which I think I solved. I’m just noting some of them here, so I can find them again. Or so you can find them, perhaps. I’ll add to this post as I encounter more oddities.

Is there life after Crashplan?

Now that Crashplan for home is gone, or at least not long for this world, a lot of people will need to find another way of backing up their stuff. It’s tempting to get angry, and there are reasons to be, but in the end you have to forget about all that and move on. Even though you may have months, or even a year, before Crashplan stops working, there is another reason you have to get a replacement up and running right now, namely file histories.

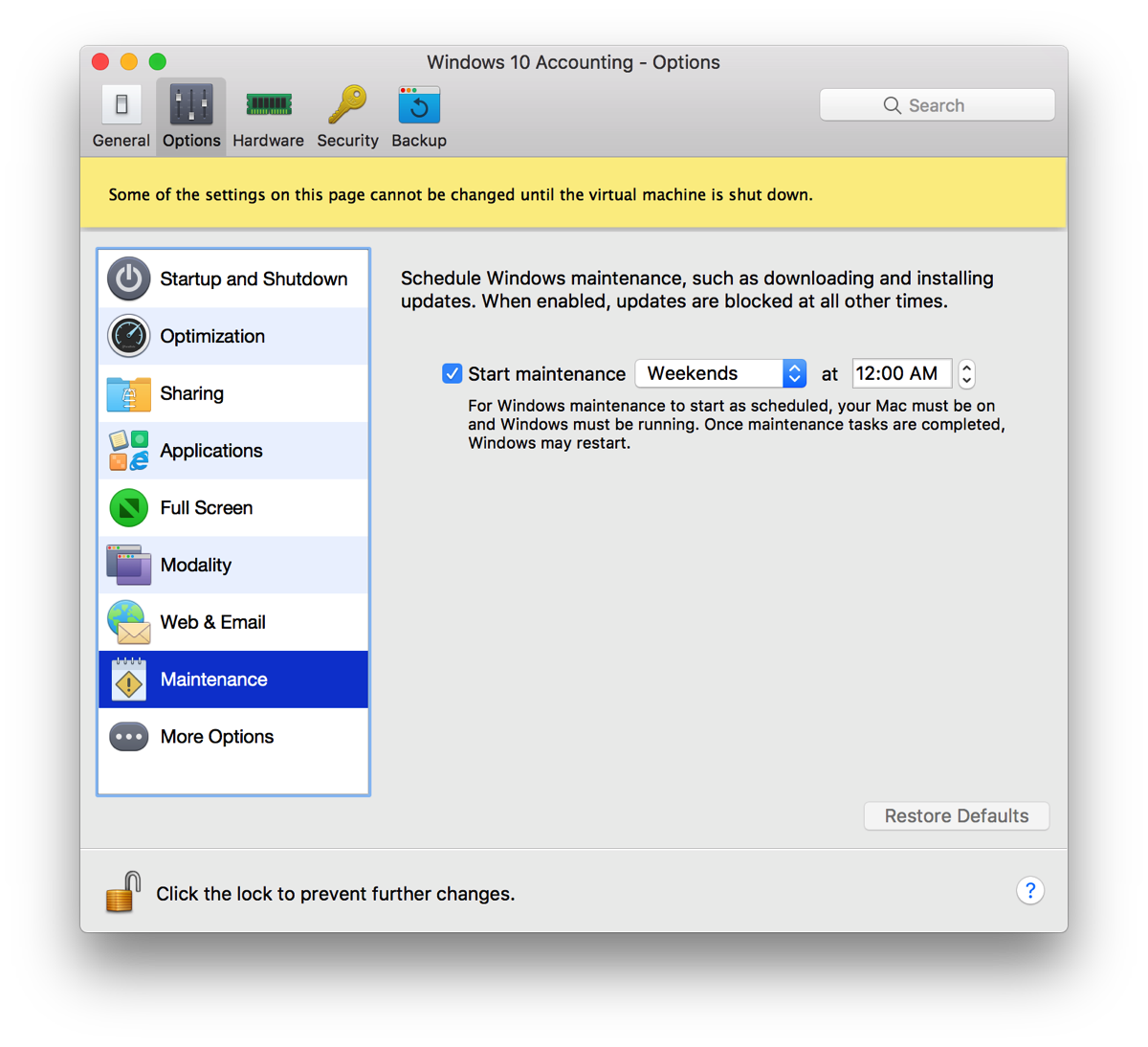

Parallels protects us from Microsoft

I just discovered that Parallels v12 has a really nice feature: it can stop Windows from doing updates except during a predetermined period. Brilliant! It’s also a sad testament to what Microsoft has done when it comes to respect for the user’s time and work.

“App translocation”

When applications are in “quarantine” on OSX after being downloaded, they are run in a kind of sandbox; they’re “translocated”. You don’t really see this, but weird things then happen. For instance, Little Snitch won’t let you create “forever” rules on the fly, claiming your app isn’t in “/Applications”, which it clearly is if you check in Finder.

The problem is that the extended quarantine attribute is set, and needs to be reset (at least if you trust the application). Too bad Apple didn’t provide a GUI way of doing that, so here goes the magic incantation (assuming WebStorm is the problem in the example):

First check if the attribute is set:

xattr /Applications/Webstorm.app/

com.apple.quarantine

Then if you see that it is, reset it:

xattr -d com.apple.quarantine /Applications/Webstorm.app/

…and there you go. Life is good again.
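If you run into this often, the two steps above can be wrapped in a small shell function. This is only a minimal sketch: `clear_quarantine` and the `XATTR` override variable are names I made up for illustration, and it assumes that `xattr <path>` lists the attribute names one per line, as in the output shown above.

```shell
#!/bin/sh
# Hypothetical helper: remove the quarantine attribute from one app bundle,
# but only if it is actually set.
# XATTR is an assumed override hook (handy for dry runs); it defaults to
# the system xattr tool.
XATTR="${XATTR:-xattr}"

clear_quarantine() {
    app="$1"
    # "xattr <path>" prints the attribute names, one per line.
    # grep -x only matches a line that is exactly the quarantine attribute.
    if "$XATTR" "$app" 2>/dev/null | grep -qx 'com.apple.quarantine'; then
        # Same command as the manual reset step.
        "$XATTR" -d com.apple.quarantine "$app" && echo "cleared: $app"
    else
        echo "not quarantined: $app"
    fi
}
```

With that loaded in your shell, `clear_quarantine /Applications/Webstorm.app/` does the check and the reset in one go. As before, only do this for applications you trust.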

Checking phone status

Just found out about two services where you can check if any mobile phone is lost or stolen by entering the IMEI:

Check on your downloads

This is a neat service that lists torrents downloaded for any given IP. See what your kids do while you’re not watching. Or maybe your neighbours? It’s a little bit scary.

Medical IT crap, the why

(Continuing from my previous post.)

I think the major problem is that buyers specify domain functionality, but not the huge list of “non-functional requirements”. So anyone fulfilling the functional requirements can sell their piece of crap as lowest bidder.

Looking at a modern application, non-functional requirements are stuff like resilience, redundancy, load management, the whole security thing, but also cut-and-paste in a myriad of formats, a number of import and export data formats, ability to quick switch between users, ability to save state and transfer user state from machine to machine, undo/redo, accessibility, error logging and fault management, adaptive user interface layouts, and on and on.

I’d estimate that all these non-functional requirements can easily be the largest part of the design and development of a modern application, but since medical apps are, apparently, never specified with any of that, they’re artificially cheap, and, not to mince words, a huge pile of stinking crap.

It’s really easy to write an app that does one thing, but it’s much harder and more expensive to write an app that actually works in real environments and in conjunction with other applications. So, this is on the purchasers’ heads. Mainly.

A day in the life of “medical IT security”

This article is an excellent description of some of the serious problems related to IT security in healthcare.

Even though medical staff actively circumvent “security” in myriad inventive ways, it’s pretty clear that 99% of the blame lies with IT staff and vendors being completely out of touch with the actual institutional mission. To be able to create working and usable systems, you *must* understand and be part of the medical work. So far, I’ve met very few technologists even remotely interested in learning more about the profession they’re ostensibly meant to be serving. It boggles the mind, but not in a good way.

Some quotes:

“Unfortunately, all too often, with these tools, clinicians cannot do their job—and the medical mission trumps the security mission.”

“During a 14-hour day, the clinician estimated he spent almost 1.5 hours merely logging in.”

“…where clinicians view cyber security as an annoyance rather than as an essential part of patient safety and organizational mission.”

“A nurse reports that one hospital’s EMR prevented users from logging in if they were already logged in somewhere else, although it would not meaningfully identify where the offending session was.”

This one, I’ve personally experienced when visiting another clinic. Time and time again. You then have to call back to the office and ask someone to reboot or even unplug the office computer, since it’s locked to your account and no one at the office is trusted with an admin password… Yes, I could have logged out before leaving, assuming I even knew I was going to be called elsewhere. Yes, I could log out every time I left the office, but logging in took 5-10 minutes. So screen lock was the only viable solution.

“Many workarounds occur because the health IT itself can undermine the central mission of the clinician: serving patients.”

“As in other domains, clinicians would also create shadow systems operating in parallel to the health IT.”

Over here, patients are given full access to medical records over the ‘net, which leads physicians to write down less in the records. Think this through to its logical conclusion…