Oh, wow, this was crazy. What I needed was to have a Juniper SSG-5 firewall (which runs ScreenOS 6.2) authenticate users against the FreeRADIUS server that comes with OSX Snow Leopard Server (10.6.3). And I needed the SSG-5 to treat users differently depending on their groups in Open Directory on the OSX server. But, man, is this poorly documented… the only thing you find in the OSX documentation is how to get a wireless access point to let users in. That’s it. Not good enough.


First, a list of documents you may need, or I may need later and don’t want to lose:

dictionary.freeradius.internal – an Apple document listing the attributes passed to FreeRadius.

A newsgroup message with some useful examples

Using OSX Radius with third party devices – has some info on hunt groups

Make sure radius is running

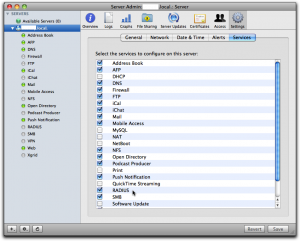

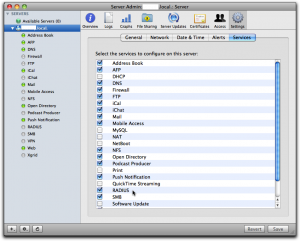

In Server Admin, make sure Radius is selected in the services tab so it appears in your list of services in the left pane.

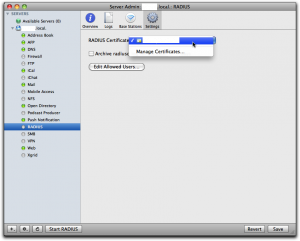

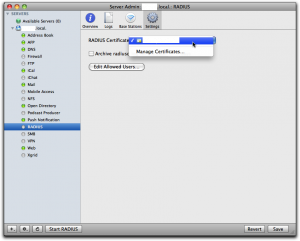

Then select “Radius” in the left panel, select “Settings” and click the dropdown for “RADIUS Certificate”. There you should either select a cert you already have installed on the server, or else select “Manage Certificates…” to go and create one. I already had one, and I had it created by CAcert, a free service for certificates of all kinds.

When you’ve got the cert sorted out, click the button “Edit Allowed Users…” and you’ll get to this screen:

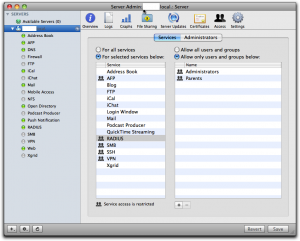

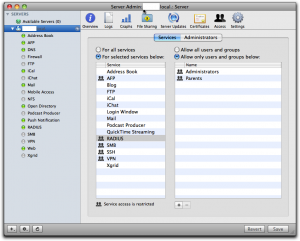

See to it that you’ve selected “For selected services below:” in the left half of the right pane and that “RADIUS” is selected in the list. Then use the plus sign below right to add all groups you want to manage through Radius. Don’t forget to click the “Save” button when you’re done.

If you have any regular wireless access points you want to add, you can do that through the Server Admin as well, but you can’t add any other devices this way.

Just to see if things are more or less right, try to start the Radius server and then check the logs. You can do that by selecting RADIUS in the left panel, clicking the “Logs” tab at the top and then playing with the “Start RADIUS” and “Stop RADIUS” buttons at the bottom of the screen.

If it complains about the lack of any clients, don’t worry about it. Just leave it off, since we’ll add clients through the command line shortly.

Once you’ve played with this for a while and are satisfied that it is not too bad, you can leave the Radius server off. We’ll start it from the command line later.

It’s important to understand that all the groups you select here, and only those groups, are copied over to the user database in the Radius server. Any users that are not in one of these groups cannot ever be enabled through Radius; they’re simply not seen by the Radius server.

Also important to understand is the fact that this is as far as Apple goes in its GUI implementation of Radius. That is, any user that is enabled for Radius this way can log in to any Radius enabled wireless access points on your net. They don’t make any distinction according to user or group as to what you can do, nor do they implement anything else but wireless access points. This means that for more sophisticated usage, you have to proceed on your own, largely through the command line and config files.

Add clients to radius

A “client” is a piece of equipment that will ask the radius server to authenticate users, so clients are access points, firewalls, maybe switches and routers. Each of these pieces of equipment that you want to have call the radius server needs to be configured in the server with its IP address and a shared secret (password). The shared secret is the same on both sides of a pairing, so each piece of equipment shares its secret with the radius server, but each pairing can have a different secret. If you want to add just Apple supported wireless access points, you can do that through Server Admin, but for everything else you have to do it as follows.

To add a client to the radius server, you use the radiusconfig utility on the OSX server:

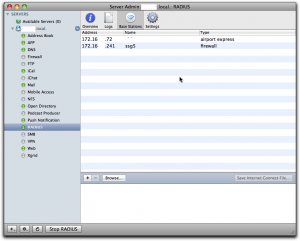

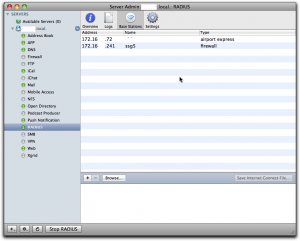

sudo radiusconfig -addclient 172.16.200.241 ssg5 firewall

After you enter this command, radiusconfig will ask you for the shared secret. Remember it, because this is the same secret we will need to enter in the SSG-5 later. A side note: the last parameter is the type and I gave it as “firewall”. As far as I can see, it’s purely descriptive and you can call it “bigbrownbear” for all the difference it makes.
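Why the secret has to be identical on both ends can be sketched in a few lines of Python. This follows my reading of how RFC 2865 (section 5.2) hides the User-Password attribute; it assumes plain PAP-style password authentication, and I have not checked which method the SSG-5 actually uses. The function names are my own, not part of any RADIUS tool.

```python
import hashlib

def hide_password(password: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """Hide a password the way RFC 2865 section 5.2 describes (sketch)."""
    # Pad with NUL bytes to a multiple of 16; an empty password still uses one block.
    padded = password + b"\x00" * (-len(password) % 16)
    if not padded:
        padded = b"\x00" * 16
    hidden = b""
    previous = authenticator  # the 16-byte Request Authenticator
    for i in range(0, len(padded), 16):
        digest = hashlib.md5(secret + previous).digest()
        block = bytes(p ^ d for p, d in zip(padded[i:i + 16], digest))
        hidden += block
        previous = block  # later blocks chain on the previous hidden block
    return hidden

def reveal_password(hidden: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """Reverse hide_password; only works with the same shared secret."""
    plain = b""
    previous = authenticator
    for i in range(0, len(hidden), 16):
        digest = hashlib.md5(secret + previous).digest()
        block = hidden[i:i + 16]
        plain += bytes(c ^ d for c, d in zip(block, digest))
        previous = block
    return plain.rstrip(b"\x00")
```

If the firewall hides the password with one secret and the server tries to reveal it with another, the server ends up comparing garbage against the real password, so the login fails even though the user typed everything correctly.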

If you check the list of “Base Stations” in Server Admin, you should see this client in the list, at least if Radius is running.

Add the DEFAULT entries to the users file

Even though Radius users are held in an SQLite database under OSX, the users file still exists and is read. In this file, we can add rules that are processed for any user that is accepted by Radius, so we can add values to be returned to the Radius client (in our case, the firewall). In the users file, you also have access to some information from Open Directory on OSX, so the users file is the place where information is translated from OSX Open Directory into something the Radius clients understand. This is where the magic happens. We write all our rules for the magic user “DEFAULT”, which matches any user accepted by Radius. More than one rule may match a real user. FreeRADIUS normally stops at the first rule that matches, and only carries on to later matching rules if the matched rule sets “Fall-Through = Yes”.

Open the “/etc/raddb/users” file on the server with pico as root:

sudo pico /etc/raddb/users

In that file, towards the end, in among the other “DEFAULT” rules, add this one:

DEFAULT Group-Name == "Parents"
        NS-User-Group = "majors"

The second line has to start with whitespace (a tab will do); that is how the users file tells reply attributes apart from the start of a new rule. This rule checks whether the user belongs to a group called “Parents” in Open Directory on OSX and, if so, sends the NS-User-Group attribute with the value “majors” to the client, in this case our firewall. We’ll add another rule:

DEFAULT Group-Name == "Children"
        NS-User-Group = "kids"

Note: I made the group names very different on the OSX Open Directory side (“Parents” and “Children”) and on the Radius client side (“majors” and “kids”) just to make it extra clear which group is which.
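To make the translation concrete, here is a small Python model of how I understand these two DEFAULT rules to be evaluated. It is only a sketch: the `Rule` class and `reply_for` function are names I made up, and it assumes the standard FreeRADIUS behaviour that matching stops at the first matching rule unless that rule sets Fall-Through, and that “=” only adds a reply attribute if it is not already set.

```python
from dataclasses import dataclass

@dataclass
class Rule:
    group: str                  # Open Directory group tested by: Group-Name == "..."
    reply: dict                 # reply attributes sent back to the Radius client
    fall_through: bool = False  # models "Fall-Through = Yes" on the rule

# The two rules from this post, in the order they appear in the users file.
RULES = [
    Rule("Parents", {"NS-User-Group": "majors"}),
    Rule("Children", {"NS-User-Group": "kids"}),
]

def reply_for(user_groups: set, rules=RULES) -> dict:
    """Return the reply attributes for a user with the given OSX groups."""
    reply = {}
    for rule in rules:
        if rule.group in user_groups:
            for name, value in rule.reply.items():
                # "=" adds the attribute only if it is not in the reply yet.
                reply.setdefault(name, value)
            if not rule.fall_through:
                break  # first match wins unless Fall-Through is set
    return reply
```

A user in “Parents” comes back with NS-User-Group “majors”, a user in “Children” with “kids”, and a user in neither group gets no NS-User-Group at all, which is what the firewall policies further down rely on.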

Set up authentication server on the SSG-5

Now we have to tell the SSG-5 how to find and talk to the Radius server. Log in on the SSG-5, go to “Configuration” – “Auth” – “Auth Servers” and click “New”.

Give the OSX Server a name, any name. It’s used to refer to this server when you create policies in the SSG-5 later. Enter the IP address, and select “Auth” under “Account type”.

In the lower part, select the “RADIUS” radio button and set the “RADIUS Port” to 1812, which is the default on the OSX FreeRADIUS server. Set the “RADIUS Accounting Port” to 1813, even though we don’t use accounting in this example. In the “Shared Secret” field, enter the same shared secret you entered while defining the SSG-5 client on the OSX Server using radiusconfig (see above). Leave the other fields unchanged and click “Save” at the bottom of the screen.

Add external groups to the SSG-5

We configured the OSX FreeRADIUS, via the DEFAULT rules in the users file, to return the groups “majors” and “kids” depending on who is logging on. Now we have to set up these groups on the SSG-5 as well. Go to “Objects” – “Users” – “External Groups” and click “New”.

In “Group Name”, write “majors”, then select the “Auth” checkbox for “Group Type”. Click the Ok button, then repeat the process for the “kids” group.

Now we do a policy

Now we finally arrive at writing policies that make use of the groups. In this example, I’m going to limit access to the dn.se site, Sweden’s largest newspaper, and I’ll only make it accessible to OSX users that belong to the “Parents” group on OSX. To do this, I’ll first have to make a policy that by default stops everyone from accessing dn.se, then add a policy that allows members of the external group “majors” to access it anyway (remember that the OSX group “Parents” is translated to the group “majors” in the users file, so the external group is “majors” on the SSG-5). Let’s first do the policy that blocks all access to dn.se for everyone.

Go to “Policy” – “Policies”.

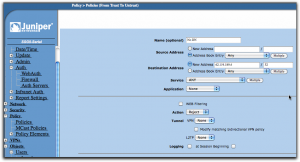

Select “Trust” in the upper left dropdown (from) and “Untrust” in the upper right dropdown (to), then click “New”.

Use dig or nslookup from the command line to find the IP number for dn.se. As of the writing of this post, it was a single IP number: 62.119.189.4.
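If you would rather script the lookup than read dig output, something like the following should do. It is a minimal sketch using only the Python standard library; the function names are mine, and it only looks at IPv4 addresses because that is what the policy form in this post takes.

```python
import socket

def ipv4_addresses(hostname: str) -> list:
    """Return the sorted, de-duplicated IPv4 addresses a name resolves to."""
    infos = socket.getaddrinfo(hostname, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (address, port)).
    return sorted({info[4][0] for info in infos})

def as_policy_destination(address: str) -> str:
    """Format a single host the way the policy form wants it: address plus /32 mask."""
    return f"{address}/32"

# Example (needs working DNS, so it is left commented out):
# for address in ipv4_addresses("dn.se"):
#     print(as_policy_destination(address))
```

If the name resolves to more than one address, each of them would need its own address book entry or policy, so it is worth checking before you hard-code a single IP.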

When the form opens, give the policy a reasonable name like “No DN”, leave the source address set to “Any”, but change the destination address to “62.119.189.4” and put in “32” in the mask field. The “Action” dropdown should be set to “Reject” and you can leave everything else as it was and click “Ok”.

Use the move tools in the policy list (far right) to move this policy to the top of the list. The policies are processed from top to bottom, so we want to make sure the rejection happens before any other policy may allow the connection.
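The top-to-bottom behaviour is easy to model. The sketch below is not ScreenOS code, just a toy first-match evaluator in Python. The second policy in the list stands in for whatever general “permit” policy you already have (hypothetical), and the final deny when nothing matches is an assumption about the firewall’s default.

```python
import ipaddress

# (name, destination network, action), listed top to bottom as in the policy list.
POLICIES = [
    ("No DN", ipaddress.ip_network("62.119.189.4/32"), "reject"),
    ("Existing permit (hypothetical)", ipaddress.ip_network("0.0.0.0/0"), "permit"),
]

def decide(destination: str, policies=POLICIES):
    """Return (policy name, action) of the first policy matching the destination."""
    address = ipaddress.ip_address(destination)
    for name, network, action in policies:
        if address in network:
            return name, action  # first match wins, later policies are never consulted
    return None, "deny"  # assumed default when no policy matches
```

With “No DN” on top, `decide("62.119.189.4")` hits the reject policy. Swap the two entries and the same call is permitted by the general policy before “No DN” is ever looked at, which is exactly why the order matters.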

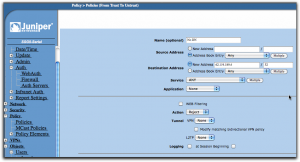

Add another policy from “Trust” to “Untrust”, then fill it in as in the following screen:

Give it another name, in this case “Allow DN”. You can now select the destination address from the address book entry dropdown so you don’t have to type it in. It’s just a convenience, since the SSG-5 now knows about this IP from the previous policy. The “Action” dropdown should now be set to “Permit”.

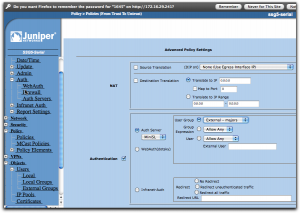

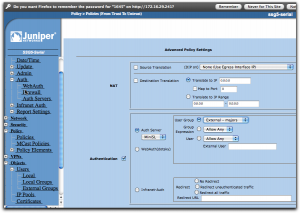

If this were all we did, we would simply have cancelled out the previous policy, at least if we put this one above it in the policy list, and that would be pointless. Instead, click on the “Advanced” button at the bottom of the screen.

Now everything comes together. Enable “Authentication” by selecting that checkbox, then select “Auth Server” using the radio buttons. In the dropdown, select the auth server you created earlier (I called mine “MiniSL”). Slightly to the right, you can select who is going to be authenticated, and here you select “User Group”, then “External – majors”. If this selection isn’t available, check that you defined that external group as I described a bit earlier.

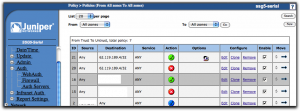

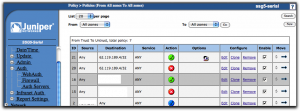

With all this done, save. In the list of policies, you should put this new policy at the top using the move tools in the last column so it ends up above the first policy we did that is set to reject connections to dn.se for everyone. The result should look like this:

Testing it all

If you started the Radius server through Server Admin, go there and stop it first. Log in to the OSX server and open a terminal shell. Start the Radius server in debug mode from there with:

sudo radiusd -X

This should get your Radius server running and you’ll see how it handles requests. Now go to a browser on any other machine on the local net and try to open dn.se. You should get a login dialog from the browser itself. If you provide the username and password of someone who is defined in Workgroup Manager on the OSX server and is in the “Parents” group, you should get access; otherwise not.

I hope it works for you. If not, explore the radclient tool as well, since it’s very useful for finding configuration errors. Once it all works, stop the Radius server on the command line and start it from Server Admin instead, so it runs as it normally would.

A little remark: if you change settings in the users file, you have to stop and start the Radius server again each time, else it won’t see the changes.

I’m planning to do a post on hunt groups as well, but I haven’t done them yet, so it could be a while.

Additional notes

You will find files with all the predefined attributes in the folder /usr/share/freeradius. Each type of equipment has its own file. The attribute names I used above come from the file “dictionary.netscreen”.
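If you want to see which attributes a dictionary file defines without reading through it by hand, a few lines of Python will list them. This is a sketch that assumes the usual FreeRADIUS dictionary layout, where each definition is a line of the form “ATTRIBUTE name number type”; the function names are mine.

```python
from pathlib import Path

def parse_attributes(text: str) -> dict:
    """Map attribute name -> (number, type) for every ATTRIBUTE line in the text."""
    attributes = {}
    for line in text.splitlines():
        # Drop trailing comments, then split on whitespace.
        fields = line.split("#", 1)[0].split()
        if len(fields) >= 4 and fields[0] == "ATTRIBUTE":
            attributes[fields[1]] = (fields[2], fields[3])
    return attributes

def load_dictionary(path: str = "/usr/share/freeradius/dictionary.netscreen") -> dict:
    """Read a dictionary file from disk (the default is the path used in this post)."""
    return parse_attributes(Path(path).read_text())
```

On the server, checking `"NS-User-Group" in load_dictionary()` is a quick way to confirm that the attribute name used in the users file is actually known to FreeRADIUS.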